Capturing information is useless unless you know how to use it. Here’s what I use to get the most out of my basic trace of anything taking over so many seconds. This is also my template for more invasive traces, and it gets tweaked on a case-by-case basis.

It’s my typical style with half of it commented out where less run portions can be highlighted and run and portions of my WHERE clause don’t have to be rewritten to be added in. The first line gets highlighted as soon as I open this script every time so I can run it, copy the path of the trace I’m looking for, and paste it into my main script. As for the WHERE clause, call me lazy or whatever it is you call people who can’t remember the right word, but I don’t always remember the exact wording of each column name that I want to filter by. I simply uncomment them as I need them.

The only thing I can say is really impressive here is finding the job names, which is a piece of this script I will never forget figuring out. Other than that, just little things to help readability when both running this script and when playing with the WHERE clause. I can’t tell you why duration is microseconds and CPU is milliseconds, but I can say the SELECT and WHERE clauses are set up to use the same units I would use when I talk to someone.

--SELECT * FROM sys.traces /* --sp_trace_setstatus id, status exec sp_trace_setstatus 3,0 --stop exec sp_trace_setstatus 3,2 --close --0 stop --1 start --2 close */ --query running trace SELECT TOP 10000 Database_Name = DB_Name(DatabaseID) , DatabaseName , starttime , endtime , textdata = cast(textdata as varchar(4000)) , Duration_Sec = cast(duration/1000/1000.0 as Dec(10,1)) , CPU_Sec = cast(cpu/1000.0 as Dec(10,1)) , Reads_K = cast(reads/1000.0 as Dec(10,0)) , Writes_K = Cast(writes/1000.0 as Dec(10,1)) , hostname , LoginName , NTUserName , ApplicationName = CASE LEFT(ApplicationName, 29) WHEN 'SQLAgent - TSQL JobStep (Job ' THEN 'SQLAgent Job: ' + (SELECT name FROM msdb..sysjobs sj WHERE substring(ApplicationName,32,32)=(substring(sys.fn_varbintohexstr(sj.job_id),3,100))) + ' - ' + SUBSTRING(ApplicationName, 67, len(ApplicationName)-67) ELSE ApplicationName END , SPID , TE.* FROM fn_trace_gettable('D:\Traces\DurationOver10sec_110.trc',default) T LEFT JOIN sys.trace_events TE ON T.EventClass = TE.trace_event_id WHERE endtime > DATEADD(Hour, -3, GetDate()) --AND Duration > 1000000 * 15 --in seconds --AND CPU > 1000 * 10 --in seconds --AND Writes > 10000 --AND Reads > 1000000 --AND te.category_id = 2 --Auto Grow/Shrink found in default trace --AND t.databaseid = DB_ID('master') --AND t.DatabaseName = 'tempdb' --AND t.LoginName <> 'shood' --AND t.textdata like '%%' ORDER BY endTime DESC, starttime DESC

So, for those of you not too familiar with tracing, what does all of this mean and what am I looking for? It depends on what’s wrong. The day after an app’s upgrade I’m looking for procs that snuck through testing and are killing the server. When a user complains about performance you might see a new proc showing up because it’s getting a bad execution plan. If tempdb or the logs fill up I can tell you what had 100,000 writes and just happened to end at that exact moment. Sometimes I’ll glance to see if anything is hitting or approaching the app’s 30 second timeout.

A general trace such as the RPC:Completed and SQL:BatchCompleted that take over X seconds won’t answer all of your questions, but it will be a good start. The most important things are to always have something running (could be extended events instead of a trace) to tell you the big things that are running and to have your monitoring limited enough where you aren’t the one slowing down the server. Make sure it’s customized to each server since a 1 second duration in a web app is a big deal, but other servers you would get flooded with results unless you bump it up to 10 seconds. You can run more invasive traces when you need them.

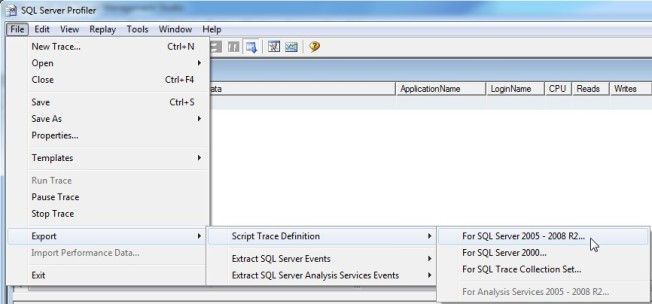

There are two hits against extended events where I’m even using traces for this. First, I still have too many SQL 2005 servers around and like to do things as consistent as reasonably possible across all servers to make it simple. Second, I’m still not too happy about extended events skipping SQL:BatchCompleted in SQL 2008 as that misses out on finding large loops of small statements. Extended events are much more efficient, can do a lot that traces can’t do, but aren’t a 100% replacement for traces.

Comment on my posts, let me know what you think. Every post I have at Simple SQL Server is written to myself 5 years ago, and is exactly what I wish I had back then in my own mind. The key words being “in my own mind”, and I’d like to hear what’s on your mind. Be it constructive criticism on this post or a completely separate SQL Server subject you wish you knew more about either now or 5 years ago that you’d like to see thrown up for everyone to use, I’ll never know until you tell me.

Related articles

- Tracing Introduction (simplesqlserver.com)

- SQL Server Running Slow (simplesqlserver.com)